MTA Essay on AI featured in Wayfare Magazine

Wayfare Magazine’s latest online issue features contributions from various LDS thinkers on artificial intelligence, including an essay by MTA President Carl Youngblood, “Algorithmic Advent.” Carl explores the existential risks humanity faces during the emergence of artificial general intelligence (AGI), and shares several relevant insights from Mormon theology. In addition to this groundbreaking article, Carl recently participated in a panel discussion at Writ and Vision bookstore that was also transcribed for Wayfare. Be sure to check out the posts and add your comments to the discussion.

AI in the News

AI has been a hotly debated topic in the news lately, including among religious thinkers. The editors of Commonweal Magazine expressed support for Eliezer Yudkowsky’s recent call for a moratorium on AI research, an idea championed by several industry insiders. In other articles, they voiced concerns over the proliferation of mediocre AI-generated content and the possibility that humans may increasingly become the servants, rather than the masters, of technology. Writing for Plough, Jeffrey Bilbro worries that over-reliance on AI may be a Faustian bargain that will cause human abilities to atrophy. Jessica Mesman, associate editor at The Christian Century, fears that because AI is trained on human behavior, “it will tend to replicate our worst flaws.”

A more positive perspective was shared in Comment by Walter Scheirer, professor of computer science and engineering at the University of Notre Dame, who encouraged people to avoid sensationalism around the topic of AI by “being generous with our time to help others understand the positive uses of newly emerging technology.”

Transhumanist-adjacent secular publications also had interesting things to say. Noema Magazine explores the limitations of current generative AI services and worries that fear of an AGI takeover may be distracting people from more urgent near-term risks posed by the current state of the art. Palladium argues that “AGI is both possible and deadly,” although it cautions that the present AI investment cycle may be overhyped.

On personal blogs, two posts that we found especially insightful came from Peter Lewis and Venkatesh Rao. In “Of Fish and Robots,” Lewis points out the difficulty of assessing sentience and the flaws in using it as the primary test of whether a given behavior is ethical, arguing that how we treat AI and other entities of borderline sentience says a lot more about us than about the thing being treated. In “Text is All You Need,” Rao observes that the most distressing aspect of ChatGPT’s arrival seems to be how effortlessly it mimics human-like behavior. This results in either a trivialization of the human or an exaltation of the technological, and both dramatically narrow what was once a much wider gulf between the two.

From the Archives

In “Open Thou Mine Eyes,” presented at our 2018 annual conference, Chris Bradford calls our attention to the danger of “a purely technological approach to the world,” where the increasing technical abstractions around human activity can cause us to become isolated from other people, or worse, objectify others rather than recognize their agency and inherent dignity. Bradford explains how a religious perspective on transhumanism can provide an important counterbalance to these tendencies. This message seems especially relevant to current concerns surrounding artificial intelligence.

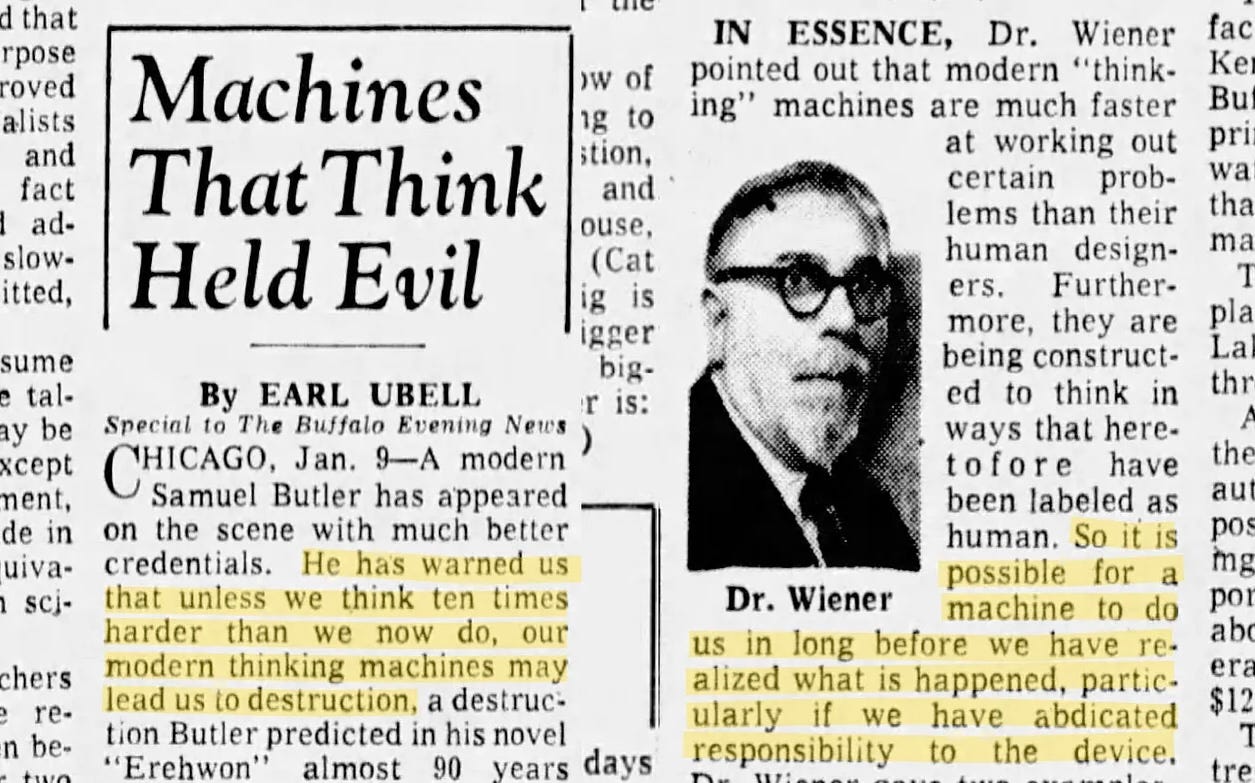

Back in the Day: The Original AI Doomer

We recently discovered a delightful source of humorous historical anecdotes reminding us that prophecies of technological apocalypse are not new. The Pessimists’ Archive, whose logo is a half-empty glass, is an entertaining foray into the fear and loathing of our forebears. This article revives the 1959 prognostications of Dr. Norbert Wiener, whose jeremiads seem remarkably similar to those of Eliezer Yudkowsky, the author of the recent op-ed in Time calling for a moratorium on AI research. Dr. Wiener's warnings cite the predictions of an even earlier figure, Samuel Butler, made in 1863, and claim that although Butler's warnings did not come to pass, things would be different this time (in 1959) because machines had become so much faster. We found this blog both amusing and helpful for gaining needed perspective on technological advances.